The Vision SDK has now processed more than 1 billion images, identifying and refreshing 15M+ traffic signs in the United States. That covers almost every road sign across the 30 largest US metro areas and works out to more than 2 signs for every mile of navigable road. With every observation, our maps get more accurate and useful, and our refresh speed increases.

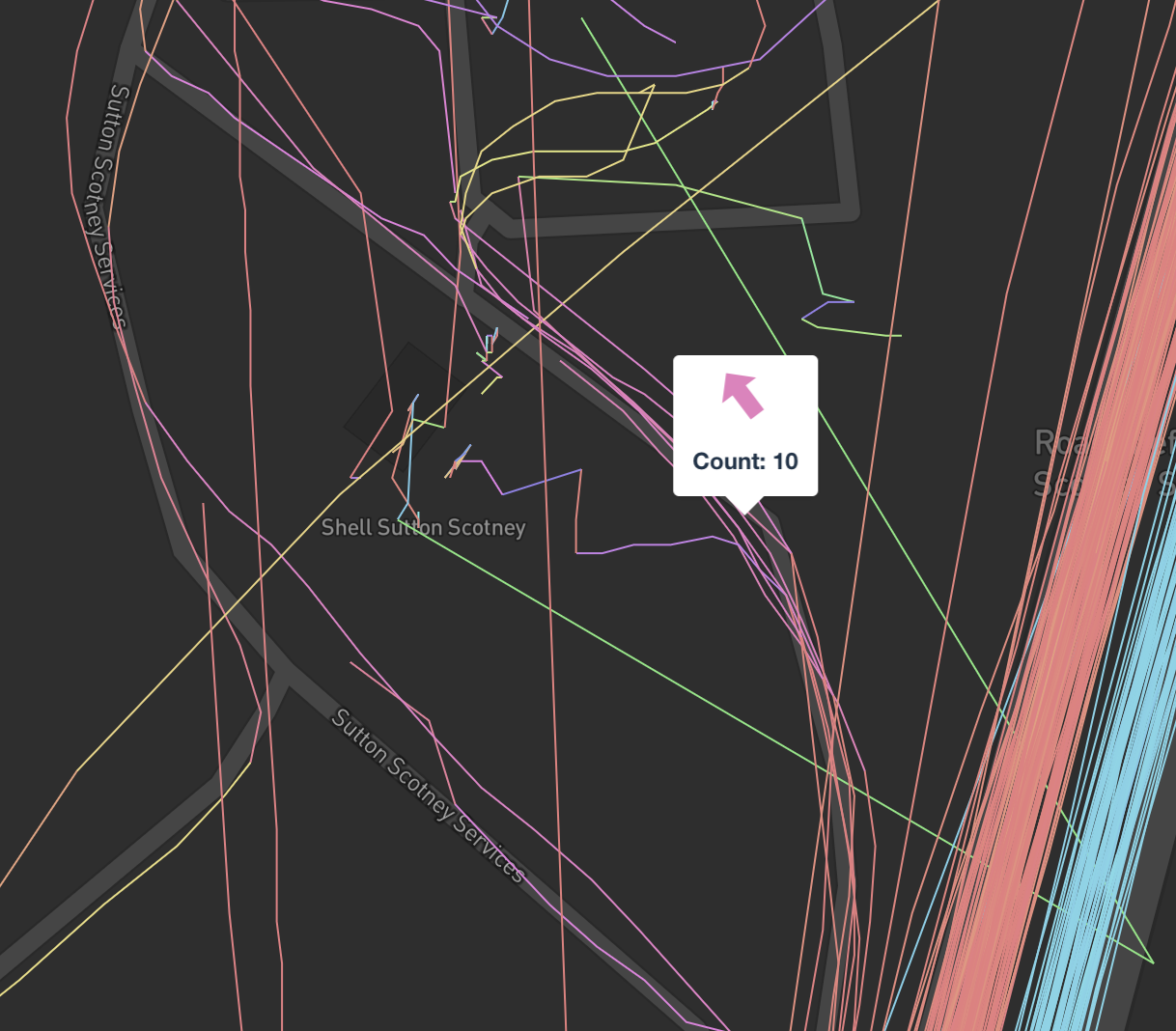

The collected data is ingested into the map and streamed to cars and phones running Navigation SDK-based applications every 24 hours. This processing pipeline lets us spot newly opened or closed roads and changes in routing conditions as they happen. The volume of data also improves our accuracy: using Vision’s Dynamic Camera Calibration, multiple passes of a detection from different vehicles allow us to cancel out errors. For example, 9 separate detections on I-276 outside Philly were all used to localize a single speed-limit sign.
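To make the error-cancelling idea concrete, here is a minimal sketch of how repeated sightings of one sign could be combined into a single position. It is an illustration only, not Mapbox's actual method: the `Detection` class, the `fuse_detections` function, and the coordinates are all hypothetical, and the per-axis median is just one simple choice of robust estimator.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Detection:
    """One vehicle's estimate of a sign position (hypothetical structure)."""
    lat: float
    lon: float


def fuse_detections(detections: list[Detection]) -> Detection:
    """Combine repeated sightings of one sign into a single position estimate.

    Takes the median of each coordinate, so a single badly localized pass
    does not drag the result away from the cluster of good ones.
    """
    if not detections:
        raise ValueError("need at least one detection")
    return Detection(
        lat=median(d.lat for d in detections),
        lon=median(d.lon for d in detections),
    )


# Illustrative, made-up coordinates: four passes agree closely, one is far off.
passes = [
    Detection(40.0001, -75.0003),
    Detection(40.0002, -75.0002),
    Detection(40.0003, -75.0001),
    Detection(40.0002, -75.0002),
    Detection(40.9000, -74.1000),  # outlier from a poorly calibrated pass
]
fused = fuse_detections(passes)
```

With more passes, independent errors tend to average out, which is why a sign seen by many vehicles can be placed more precisely than any single detection allows.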

Vision and its Lane Detection API capture 700+ types of detections, from rich data about lane markings to traffic signs and control signals. Vision provides two different ML models: one optimized for performance on low-power chipsets, and one optimized for quality on more expensive embedded systems.

Vision looks at the road from the perspective of the driver, using computer vision to 1) expand the coverage area, 2) add detailed road attributes like exact lane paths, and 3) rapidly turn these observations into an updated live map.

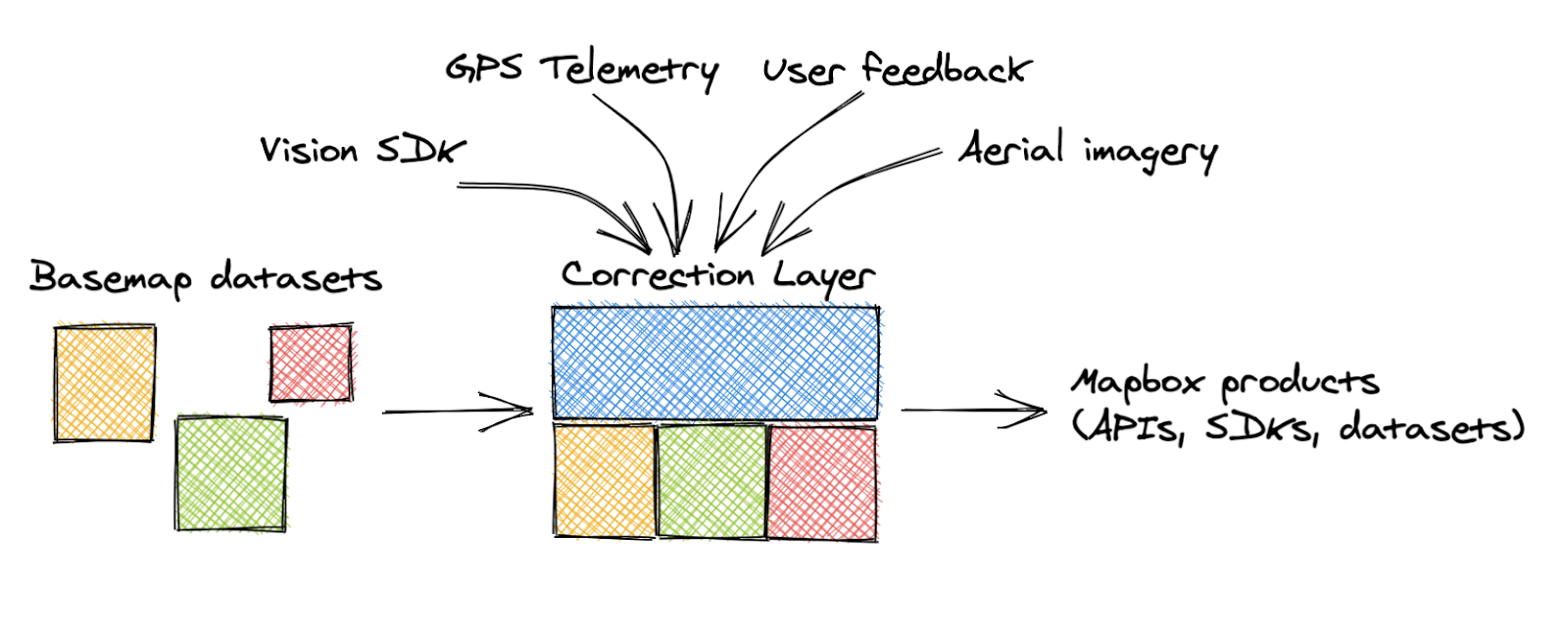

In addition to Vision, our data pipeline processes over 300 million miles of anonymized driving data every day, allowing Mapbox to continuously map everywhere in the world. The breadth of this pipeline allows us to offer more detailed maps and updated traffic coverage on more streets than existing datasets. Our Corrections Layer integrates both proprietary and open datasets — we generate corrections on top of raw basemap data from a variety of sources:

- Sign detections from the Vision SDK and streetside imagery

- Turn restrictions, missing roads, and one-way streets derived from location telemetry

- Features extracted from our global satellite and aerial imagery

- Edits based on customer feedback

The Mapbox Corrections Layer conflates all of these data sources to provide a single, canonical view of the world.
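As a rough sketch of what layering corrections over raw basemap data can look like, the example below merges attribute-level corrections from several sources into one view. Everything here is an assumption made for illustration: the `Correction` structure, the `conflate` function, the confidence scores, and the "highest confidence wins" rule are hypothetical and do not describe how the Corrections Layer is actually implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Correction:
    """A proposed attribute value for one map feature (hypothetical structure)."""
    feature_id: str
    attribute: str
    value: object
    source: str        # e.g. "vision", "telemetry", "imagery", "feedback"
    confidence: float  # assumed score between 0 and 1


def conflate(basemap: dict, corrections: list) -> dict:
    """Return a merged view: basemap attributes overridden by corrections.

    When several corrections target the same feature attribute, the one
    with the highest confidence is kept. The input basemap is not modified.
    """
    merged = {fid: dict(attrs) for fid, attrs in basemap.items()}
    best = {}
    for c in corrections:
        key = (c.feature_id, c.attribute)
        if key not in best or c.confidence > best[key].confidence:
            best[key] = c
    for (fid, attr), c in best.items():
        # Corrections may also introduce features missing from the basemap.
        merged.setdefault(fid, {})[attr] = c.value
    return merged


# Made-up example data.
basemap = {"way/1": {"oneway": False, "maxspeed": 55}}
corrections = [
    Correction("way/1", "maxspeed", 65, "vision", 0.9),
    Correction("way/1", "maxspeed", 60, "feedback", 0.4),
    Correction("way/2", "oneway", True, "telemetry", 0.8),
]
canonical = conflate(basemap, corrections)
```

Keeping corrections separate from the raw basemap, as in this sketch, means the underlying data can be refreshed without losing the overrides applied on top of it.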

With the Corrections Layer, the map updates live based on drivers’ actual behavior, identifying newly added streets and new routing restrictions as the number of lanes changes to accommodate curbside pickups and protect pedestrians.

Enhanced Location Engine: This improvement is measurable. Drivers using the latest Navigation SDK experience more detailed navigation, with fewer re-routes and unsafe maneuvers. Like all Mapbox APIs and SDKs, the Navigation SDK is available to developers as pay-as-you-go, with no commitments needed. To let fleets align costs to each driver, the SDK uses MAU (monthly active user) based billing, which combines all Directions and Maps API requests into one consistent per-driver price. Developers who prefer Directions API-based billing may still use it by calling the APIs directly from within their apps.